ausstorageguy


Why I believe you should stop worrying about SPC-1 benchmarks.

First up, I want to make it very clear that in no way am I questioning the quality of the products mentioned in this post. I have been involved in the implementation of most of them and I believe they are quality arrays.

###

FTC Disclosure: I am NOT employed by any vendor and receive no compensation from any vendor, with the exception of the following:

· EMC – Various USB keys, 1 x Iomega HDD, 1 x decent rain jacket, 1 x baseball cap, several t-shirts and polos, a few business lunches and dinners (not in relation to this blog), 1 x bottle opener keyring, pens.

· NetApp – Various USB keys, 1 x multi-tool and torch, 1 x baseball cap, several t-shirts and polos, a few business lunches and dinners (not in relation to this blog), 1 x bottle opener keyring (just like EMC ☺), pens, playing cards, clock.

· HDS – Various USB keys, 1 x baseball cap, several t-shirts and polos, stress ball.

· Compellent and Dell Compellent – Various USB keys, 1 x baseball cap, several t-shirts and polos.

· IBM – Various USB keys, 1 x baseball cap, several t-shirts and polos, stress ball (ironic, really).

· HP – Various USB keys, 1 x baseball cap, several t-shirts and polos, brolly, stress ball.

· Most of these were garnered as gifts or prizes at conferences.

I hold no shares in any IT vendor – although donations are graciously encouraged; however, no influence shall be garnered from them.

###

Storage performance is one of the many considerations a business should weigh when choosing an appropriate storage solution to meet its requirements, but are benchmarks such as SPC-1 the best approach?

I have a few issues with the SPC-1 benchmark, but the biggest problem is the lack of disclosure: not so much in the “Full disclosure” reports that accompany the results, but in the lack of information available about the benchmark process.

Steve Daniel at NetApp was quoted as saying: “We don’t see customers ask for SPC or SPEC numbers, one of the primary reasons we continue to publish SPEC SFS benchmarks is for historical reasons; we can’t just stop without raising questions.”

Personally, I think questions are good, so long as the answers are better.

In this instance, why continue with a benchmark that is nearly impossible to translate into a business case?

What is the relevance of the results to you and your business needs?

You see, there is little to no detail about the process: what its baseline is, or which metrics the benchmark measures. As far as I’m concerned, that limits the ability of businesses to discern the relevance of the benchmark to their environment.

I know these metrics, methods and details exist; however, to obtain them you need to sign up as a member, at a cost of between USD $500 and $6,000, and my understanding is that once signed up, you’re under a Non-Disclosure Agreement and therefore cannot divulge the information garnered. (Please, someone correct me if I’m wrong on this.)

The “Full disclosure” reports provide details of the Bill of Materials, the configuration and the results, but don’t explain how those results were obtained.

Put it this way: when I was many years younger and many kilos lighter, I used to sprint an 11.02-second average.

11.02 seconds for what, exactly? Without that bit of detail, the 11.02 is irrelevant. Was it 10 metres, 100 metres, the 110m hurdles, swimming, walking on my hands backwards?

For the record, it was the 100m on a track, but then what were the conditions? Was it wet, dry, windy? Did I have spikes on? Was it a grass or synthetic track, uphill, downhill or perfectly flat?

None of the conditions in the storage benchmarks are available to the average non-subscriber, so how does one determine whether the measurements and results are relevant to them?

In order to understand how a benchmark may be relevant to your business, you need to know the conditions under which the results were achieved.

What is the relationship between an IOPS and latency result and the number of users and applications the storage array will support in a timely manner?
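One standard way to connect the two figures is Little’s Law: the average number of I/Os in flight equals IOPS multiplied by the average response time. Getting from there to a number of users needs a per-user I/O profile, which the published results don’t supply; the `iops_per_user` value below is a hypothetical input you would have to measure in your own environment. A minimal Python sketch under those assumptions:

```python
import math


def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Little's Law: average I/Os in flight = throughput x average response time."""
    return iops * (latency_ms / 1000.0)


def users_supported(iops: float, iops_per_user: float) -> int:
    """Users an IOPS figure could carry, given a per-user I/O rate.

    iops_per_user is a hypothetical input: it has to come from measuring
    your own workload, not from the benchmark report.
    """
    if iops_per_user <= 0:
        raise ValueError("iops_per_user must be positive")
    return math.floor(iops / iops_per_user)


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    print(outstanding_ios(20_000, 5.0))  # roughly 100 I/Os in flight
    print(users_supported(20_000, 0.5))
```

Neither function tells you whether that latency is acceptable to your applications at your load, which is exactly the gap the benchmark leaves open.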

No two environments and conditions are the same.

Usain “Lightning” Bolt is an amazing man, the fastest man in the world, with 6 Olympic gold medals and a world record of 9.58 seconds for the 100m sprint, which makes it look like I did my time push-starting a bus with a gammy leg. He is a real inspiration!

But could the “Lightning Bolt” – as formidable as he is – achieve the same result running through a packed shopping centre pushing a loaded trolley? He’d be quicker than you or me, I’m certain of that, but no; that’s a very different circumstance from a professional running track in an Olympic stadium.

That’s another issue I have with the results: they bear very little resemblance to a real-life environment.

Most of the available results use a 1-to-1 relationship between the workload generator (WG) and the storage array. Occasionally there are a few results using LPARs or vPars (partitioned servers) and/or multiple servers, but nothing in terms of server virtualisation: no VMware, no Hyper-V and no Xen clusters, or even groups of servers with workloads similar to yours.

The IOPS results from the SPC-1 benchmark may as well be flat-out, straight-line achievements, because there is no way for you to translate x number of IOPS into your environment: your VMware, Exchange servers, MS SQL, MySQL, Oracle, SAP, PeopleSoft and custom in-house developed environment.

Can you use the results to determine whether the array will suit Exchange 2003 or Exchange 2010, both with very different workloads?

Additionally, there are no results involving synchronous remote replication, which would directly affect latency, nor any with asynchronous remote replication, for that matter.
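A rough illustration of why synchronous replication matters: each acknowledged write has to wait for at least one round trip to the remote array. Light travels through optical fibre at roughly 200,000 km/s, about 5 µs per km one way, so distance alone adds roughly 1 ms of round-trip time per 100 km. The sketch below assumes one round trip per write by default and lumps switch, protocol and remote-array time into a hypothetical `overhead_ms`; real replication stacks vary.

```python
FIBRE_US_PER_KM = 5.0  # approximate one-way propagation delay in optical fibre


def sync_write_latency_ms(local_ms: float, distance_km: float,
                          round_trips: int = 1, overhead_ms: float = 0.0) -> float:
    """Estimate host-visible write latency with synchronous replication.

    local_ms:    write latency without replication.
    distance_km: one-way fibre distance to the remote array.
    round_trips: round trips needed per write (assumed to be 1 by default).
    overhead_ms: hypothetical allowance for switches, protocol and remote array.
    """
    propagation_ms = round_trips * 2 * distance_km * FIBRE_US_PER_KM / 1000.0
    return local_ms + propagation_ms + overhead_ms


if __name__ == "__main__":
    for km in (10, 50, 100):
        print(km, "km:", sync_write_latency_ms(1.0, km), "ms")
```

Even this optimistic model shows a 1 ms array turning into a 2 ms array at 100 km, a difference a non-replicated benchmark result cannot show.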

And where are the results employing tiering, compression and dedupe? There are a few results using thin provisioning (only 2-3, I think), but not many, even though almost all vendors support these features. I would imagine these are the kinds of features most businesses use, or would want to use, in a real-life workload.

How can the SPC-1 benchmark reports represent anything akin to your environment when no two environments are alike? Even if you implemented exactly the same storage, storage networking and server configuration for your computing requirements and applications, the chances of achieving the same IOPS result would be next to nothing.

Credibility of the results.

How can the SPC have any credibility when it allows an array vendor to directly manipulate results? Why are there no standards for the testing hardware, OS and software? Why is there no scrutiny by the SPC of sponsored competitive tests and, for that matter, why is there no scrutiny of sponsors’ own tests and hardware?

I wrote in my (rather short) piece “Dragging up ancient history – How NetApp fooled everyone (FAS3040 v. CX3-40)” about how I believe NetApp engineered the configurations of the FAS and the CLARiiON arrays to ensure that the NetApp FAS results looked better. Various people came out of the woodwork trying to educate me on how to read a benchmark, or on how the SPC-1 benchmarks are audited; however, since the workload generators are different in each case, there is no common standard against which the comparison can be made.

Different OSes and volume/file managers handle read and write workloads differently: some are more or less tolerant, some cache, some don’t. Some switches handle traffic differently from others, and the same goes for HBAs, CPUs, memory and buses. So if the WG is different each time, how can there ever be a credible comparison?

When writing my original post, I came across some very interesting remarks in the “Full disclosure” reports, including this:

| priv set diag |
| setflag wafl_downgrade_target 0 |
| setflag wafl_optimize_write_once 0 |

NetApp wrote about these flags in http://media.netapp.com/documents/tr-3647.pdf:

| “4. Final Remarks This paper provides a number of tips and techniques for configuring NetApp systems for high performance. Most of these techniques are straightforward and well known. Using special flags to tune performance represents a benchmark-oriented compromise on our part. These flags can be used to deliver performance improvements to customers whose understanding of their workload ensures that they will use them appropriately during both the testing and deployment phases of NetApp FAS arrays. Future versions of Data ONTAP will be more self-tuning, so the flags will no longer be required. ” |

And the Storage Performance Council wrote in its guidelines (http://www.storageperformance.org/specs/SPC-1_v1.11.pdf):

| 0.2 General Guidelines |
| The purpose of SPC benchmarks is to provide objective, relevant, and verifiable data to purchasers of I/O subsystems. To that end, SPC specifications require that benchmark tests be implemented with system platforms and products that: |
| … |
| 3. Are relevant to the market segment that SPC-1 benchmark represents. |
| In addition, all SPC benchmark results are required to be sponsored by a distinctly identifiable entity, which is referred to as the Test Sponsor. The Test Sponsor is responsible for the submission of all required SPC benchmark results and materials. The Test Sponsor is responsible for the completeness, accuracy, and authenticity of those submitted results and materials as attested to in the required Letter of Good Faith (see Appendix D). A Test Sponsor is not required to be a SPC member and may be an individual, company, or organization. |
| The use of new systems, products, technologies (hardware or software) and pricing is encouraged so long as they meet the requirements above. Specifically prohibited are benchmark systems, products, pricing (hereafter referred to as “implementations”) whose primary purpose is performance optimization of SPC benchmark results without any corresponding applicability to real-world applications and environments. In other words, all “benchmark specials,” implementations that improve benchmark results but not general, real-world performance are prohibited. |

I’m not intentionally picking on NetApp here, I just happened to have that example handy. Sorry NetApp folk.

How can the council maintain credibility when the system is cheated like this? The flags were noted in the “Full disclosure” report, but their actual use case was not.

My issue here is not a question of the council’s credibility (I can see the issue is not the auditor’s fault) but the dependency on “Good Faith”. It now seems common practice for vendors to submit their configurations masked behind complex configuration scripts, hiding the details from the common folk. Scripting is common practice for a field engineer installing an array; however, it makes it very difficult for the average punter to dig deeper and find the truth.

Here’s another example. The HP 3PAR configuration below, like the NetApp example above, obscures a small but important configuration detail; this one was discovered by Nate Amsden of the techopsguys blog:

http://www.techopsguys.com/2011/10/19/linear-scalability/#comments

| createcpg -t r1 -rs 120 -sdgs 120g -p -nd $nd cpgfc$nd |
| createvv -i $id cpgfc${nd} asu2.${j} 840g; |
| createvlun -f asu2.${j} $((4*nd+i+121)) ${nd}${PORTS[$hba]} |

Here you’ll see (I originally highlighted it in red) where HP have pinned the volumes to controller pairs and LUs (LUNs) to specific ports on the controllers. This is not the typical configuration that HP will sell you.

In this configuration, HP are bypassing the interconnect mesh, of which HP’s own description says:

“The interconnect is optimized to deliver low latency, high-bandwidth communication and data movement between Controller Nodes through dedicated, point-to-point links and a low overhead protocol which features rapid inter-node messaging and acknowledgement.”

If that’s the case – and I know it is – then why not use it?

Who uses only 55% of their configured capacity?

The SPC-1 benchmark specifies that the unused capacity be less than 45% of the Physical Storage Capacity:

Source: http://www.storageperformance.org/specs/SPC-1_v1.11.pdf
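To put numbers on that rule, here is a small sketch using the simplified reading above: unused capacity as a share of physical capacity, capped at 45%. The formal SPC-1 capacity definitions are more detailed, and treating exactly 45% as a pass is my assumption. For comparison, the 70%-utilised customer array mentioned below would leave only 30% unused.

```python
MAX_UNUSED_RATIO = 0.45  # threshold as described in the post (simplified reading)


def unused_ratio(physical_gb: float, used_gb: float) -> float:
    """Share of physical capacity that is left unused."""
    if physical_gb <= 0:
        raise ValueError("physical capacity must be positive")
    if not 0 <= used_gb <= physical_gb:
        raise ValueError("used capacity must be between 0 and physical capacity")
    return (physical_gb - used_gb) / physical_gb


def within_unused_limit(physical_gb: float, used_gb: float) -> bool:
    """True if the unused share does not exceed the threshold (assumed inclusive)."""
    return unused_ratio(physical_gb, used_gb) <= MAX_UNUSED_RATIO


if __name__ == "__main__":
    # Hypothetical 10,000GB array at the 55% floor and at 70% utilisation.
    for used in (5_500, 7_000):
        print(used, unused_ratio(10_000, used), within_unused_limit(10_000, used))
```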

In the old days of short-stroking disks this may have been relevant, but with modern storage arrays offering flash/SSD caches and storage tiering, there should no longer be much need for it.

Now, maybe it’s a case of my customer base and that of my colleagues as well, but in this economic climate most customers’ storage arrays tend to have very high capacity utilisation; the lowest I’ve encountered recently was close to 70% utilisation, with the remaining capacity expected to be used within the next 3-6 months.

I’d imagine that most businesses would be the same.

The other side of this is that utilisation is measured against the configured capacity, not the total potential capacity of the array available at the time of the test.

Most storage arrays’ performance degrades as capacity utilisation increases, and not always linearly: some degrade in a fairly straight line, others drop off at 90%, others not until 100%. So why not test them right to the end?

Why not also benchmark the full capability of the array?

The trouble with disks is that the closer you get to the centre, the less data passes under the heads per revolution, and the further the heads have to travel, the lower the IOPS. Using the entire radius of the disk, end to end, is full stroking; SNIA defines short-stroking as: “A technique known as short-stroking a disk limits the drive’s capacity by using a subset of the available tracks, typically the drive’s outer tracks.”
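To see why short-stroking is attractive, consider how much capacity you keep when the heads are restricted to an outer band of the platter. The sketch below assumes uniform areal density (real drives use zoned recording, which only approximates this), so capacity between two radii scales with the difference of their squares. The radii are hypothetical normalised values, not figures for any real drive.

```python
def short_stroke_capacity_fraction(stroke_fraction: float,
                                   r_inner: float = 0.5,
                                   r_outer: float = 1.0) -> float:
    """Fraction of drive capacity available when only the outer part of the
    head's travel is used.

    stroke_fraction is the share of the radial distance used, measured inward
    from the outer edge. Assumes uniform areal density across the platter.
    """
    if not 0.0 <= stroke_fraction <= 1.0:
        raise ValueError("stroke_fraction must be between 0 and 1")
    if not 0.0 <= r_inner < r_outer:
        raise ValueError("need 0 <= r_inner < r_outer")
    r_cut = r_outer - stroke_fraction * (r_outer - r_inner)
    return (r_outer ** 2 - r_cut ** 2) / (r_outer ** 2 - r_inner ** 2)


if __name__ == "__main__":
    for s in (0.25, 0.5, 1.0):
        frac = short_stroke_capacity_fraction(s)
        print(f"{s:.0%} of the stroke -> {frac:.1%} of capacity")
```

Under these assumptions, using half the stroke keeps a little under 60% of the capacity while halving the distance the heads can travel, which is the trade short-stroking makes: more IOPS per spindle in exchange for capacity left unused.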

Looking around the various submissions, I can see many cases of under-utilisation of the storage arrays tested, and it makes me wonder what would happen to the results if they used the lot and then tested it at full capacity.

Shouldn’t they be realistic configurations?

Many of the vendors submitting arrays for benchmarks state that the configurations are as bought by real customers; however, I have a problem with that.

Just because they may have been bought, it does not make such configurations a “Commonly bought configuration”.

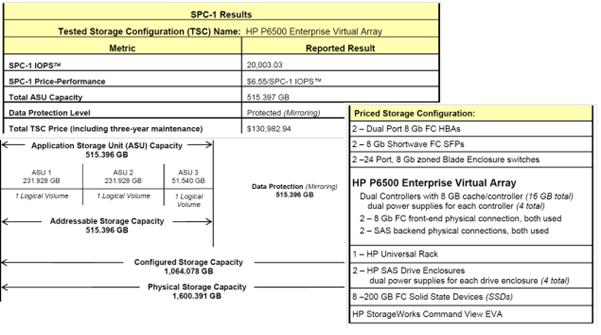

Take a look at the configuration of this HP EVA P6500 for example:

Who exactly spends USD $130,000 on an HP EVA P6500 with only 8 x 200GB SSDs, delivering only 515GB of capacity and achieving only 20,003 IOPS? It’s just not realistic.
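A quick back-of-the-envelope check of that configuration, using only the figures quoted in the post (the $130,000 is a rounded price, and raw capacity is simply taken as 8 x 200GB):

```python
def config_metrics(price_usd: float, iops: float,
                   usable_gb: float, raw_gb: float) -> dict:
    """Simple cost and efficiency ratios for a benchmarked configuration."""
    return {
        "usd_per_iops": price_usd / iops,
        "usd_per_usable_gb": price_usd / usable_gb,
        "usable_fraction_of_raw": usable_gb / raw_gb,
    }


if __name__ == "__main__":
    # Figures as quoted for the HP EVA P6500 result.
    metrics = config_metrics(price_usd=130_000, iops=20_003,
                             usable_gb=515, raw_gb=8 * 200)
    for name, value in metrics.items():
        print(f"{name}: {value:,.2f}")
```

That works out to roughly $6.50 per IOPS and over $250 per usable GB, with only about a third of the raw SSD capacity presented as usable.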

This isn’t the only example; there are dozens of such examples just like the one above.

However, in reality, I just don’t see how these configurations are justifiable.

Hitachi VSP submitted 1st November 2011


So, what should a benchmark look like?

I would never claim to know all the answers; however, to begin with, it should be one that can easily be translated into real-life examples:

· MS SQL, MySQL, Oracle etc.

· MS Exchange 2003/2010

· SAP, PeopleSoft

· VMware

· File Servers (or as a NAS)

All tested in 100-250, 251-500, 501-1,000, 1,001-4,000, 4,001-10,000 and 10,001-25,000 user configurations.
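As a sketch of what that proposal implies, the workload families and user bands above form a test matrix of 5 x 6 = 30 runs per array configuration. The labels come from the list above; the fourth band is treated as 1,001-4,000 so the bands don’t overlap, and `band_for` and `benchmark_matrix` are hypothetical helpers for illustration only.

```python
from itertools import product

WORKLOADS = [
    "MS SQL / MySQL / Oracle",
    "MS Exchange 2003/2010",
    "SAP / PeopleSoft",
    "VMware",
    "File server / NAS",
]

# Inclusive user-count bands from the proposal.
USER_BANDS = [
    (100, 250),
    (251, 500),
    (501, 1_000),
    (1_001, 4_000),
    (4_001, 10_000),
    (10_001, 25_000),
]


def band_for(users: int):
    """Return the (low, high) band containing a user count, or None if outside all bands."""
    for low, high in USER_BANDS:
        if low <= users <= high:
            return (low, high)
    return None


def benchmark_matrix():
    """Every (workload, user band) combination a vendor would need to publish."""
    return list(product(WORKLOADS, USER_BANDS))


if __name__ == "__main__":
    print(len(benchmark_matrix()))  # 30
    print(band_for(3_200))          # (1001, 4000)
```

A decision maker would then only need to look up the cell matching their workload and user count, rather than trying to translate a single headline IOPS figure.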

Real, translatable workloads, which a decision maker can use to determine whether not only the array vendors and products suit their needs, but the configurations as well.